Digital Link A¶

from IPython.core.display import HTML

import numpy as np

import matplotlib

import scipy

from scipy.stats import norm

from scipy.stats import binom

import pandas as pd

params = {'figure.figsize':(12,6), # These are plot parameters

'xtick.labelsize': 16,

'ytick.labelsize':16,

'axes.titlesize':18,

'axes.labelsize':18,

'lines.markersize':4,

'legend.fontsize': 20}

matplotlib.rcParams.update(params)

from matplotlib import pyplot as plt

import random

from ipywidgets import *

import numpy.linalg

from IPython.display import display

from IPython.core.display import HTML

from notebook.nbextensions import enable_nbextension

%matplotlib inline

print('The libraries loaded successfully')

The libraries loaded successfully

This chapter discusses useful approximation results: it provides other illustrations of the CLT, shows how the exponential distribution approaches the geometric distribution, and how the Poisson distribution approaches the binomial distribution.

Huffman Encoding¶

Recall that the Huffman encoding assigns a binary string to each symbol in a prefix-free way so that the average number of bits per symbol is minimized. Prefix-free means that one can recover the sequence of symbols uniquely from the concatenation of their codes.

The code below is from http://rosettacode.org/wiki/Huffman_coding.

def encode(symb2freq):

"""Huffman encode the given dict mapping symbols to weights"""

heap = [[wt, [sym, ""]] for sym, wt in symb2freq.items()]

heapify(heap)

while len(heap) > 1:

lo = heappop(heap)

hi = heappop(heap)

for pair in lo[1:]:

pair[1] = '0' + pair[1]

for pair in hi[1:]:

pair[1] = '1' + pair[1]

heappush(heap, [lo[0] + hi[0]] + lo[1:] + hi[1:])

return sorted(heappop(heap)[1:], key=lambda p: (len(p[-1]), p))

def HUFF(txt):

symb2freq = defaultdict(int)

for ch in txt:

symb2freq[ch] += 1

huff = encode(symb2freq)

print ("Symbol\tWeight\tHuffman Code")

for p in huff:

print ((p[0], symb2freq[p[0]], p[1]))

text = widgets.Text(layout=Layout(width='100%'))

display(text)

print('Type an arbitrary text in the box above. Upon "return", you will get the corresponding Huffman code.')

print('')

def handle_submit(sender):

HUFF(txt = text.value)

text.on_submit(handle_submit)

Type an arbitrary text in the box above. Upon "return", you will get the corresponding Huffman code.

Digital Link¶

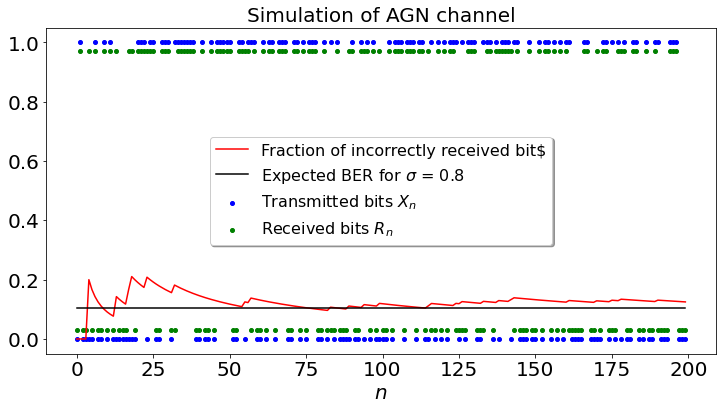

Consider a digital link that operates as follows. The input is a random string of i.i.d. symbols \(X_n\) that are \(B(0.5)\). To send \(X_n\), the transmitter sends the signal \(2X_n - 1\). That is, it sends \(-1\) to transmit a bit \(0\) and \(+1\) to transmit a bit \(1\). The channel adds an i.i.d. \({\cal N}(0, \sigma^2)\) noise \(Z_n\) to \(2X_n - 1\).

By symmetry, the MAP of \(X_n\) given \(Y_n = 2X_n - 1 + Z_n\) is \(R_n = 1\{Y_n > 0\}\). Moreover, the resulting bit error rate (BER) is given by \(P({\cal N}(0,\sigma^2) > 1\).

In the code below, we simulate the link.

def dummy(sigmad):

global sigma

sigma = float(sigmad)

sigmad = widgets.Dropdown(options=['0.2', '0.4', '0.6', '0.8','1','1.2','1.4','1.6','1.8','2.0','2.2'],value='0.8',description='T',disabled=False)

z = widgets.interactive(dummy, sigmad = sigmad)

display(z)

matplotlib.rcParams.update(params)

def DLINK(sigma):

S = 200

x,y,v,s,u = np.zeros([5,S])

F = 1 - norm.cdf(1, 0, sigma)

for i in range(S):

x[i] = np.random.binomial(1,0.5)

y[i] = 2*x[i] - 1 + np.random.normal(0,sigma)

v[i] = (y[i]>0)

u[i] = F

s[0] = 1 - (x[i]==v[i])

for i in range(1,S):

s[i] = (i*s[i-1] + 1 - (v[i]==x[i]))/(i+1)

plt.tick_params(labelsize=20)

plt.plot(s, color='r',label="Fraction of incorrectly received bit$")

plt.scatter(range(200),x, color='b',label="Transmitted bits $X_n$")

plt.scatter(range(200),0.03 + 0.94*v, color='g',label="Received bits $R_n$")

plt.plot(u, color='black',label="Expected BER for $\sigma$ = " + str(sigma))

plt.legend(loc='center', shadow=True, fontsize=16)

plt.xlabel("$n$", fontsize = 20)

plt.title("Simulation of AGN channel", fontsize= 20)

DLINK(sigma)

QPSK¶

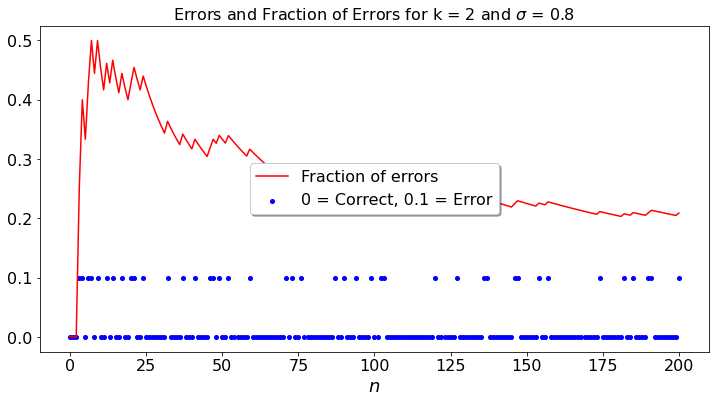

In the previous model, the link transmits two different values: one for each bit \(0\) or \(1\). Modern links use a more efficient scheme. For instance, quadrature phase shift keying (QPSK) transmits \(k\) bits at a time. Each \(k\)-bit string is transmitted as one of \(2^k\) different signals. Each signal has the form \(a_i \cos(2 \pi ft) + b_i \sin(2 \pi ft)\) where \((a_i,b_i)\) depend on the bit string. Thus, there are \(2^k\) different pairs \((a_i, b_i)\), one for each of the \(2^k\) string of \(k\) bits. The transmitter sends that signal for \(T\) seconds. The receiver measures the coefficients \((a, b)\) and determines which of the \(k\)-bit strings was transmitted. Because of noise, when the transmitter sends a pair \((a_i, b_i)\), the receiver gets \((a', b') = (a_i + v_1, b_i + v_2)\) where \(v_1\) and \(v_2\) are independent \({\cal N}(0, \sigma^2)\) random variables. The receiver determines the pair \((a_j, b_j)\) that is closest to \((a', b')\). If this pair is \((a_i, b_i)\), the receiver guesses correctly the \(k\) bits that the transmitter sent. Otherwise, the receiver makes a mistake. The figure below illustrates the process.

In the figure where \(k = 3\), the transmitter sends the bits \(000\) by choosing the values \(a = 1, b = 0\). Noise modifies these values and the receiver gets the pair \((a', b')\) indicated by the red point. The receiver finds which of the eight pairs \((a_i, b_i)\) is closest to the red point. In this example, this is the green pair that corresponds to the string \(001\). Thus, the receiver makes a mistake.

The code below simulates the process. At each step, the transmitter sends one of the \(K = 2^k\) pairs, with equal probabilities. The noise perturbs that pair. The receiver makes a mistake if it receives a pair that is closer to a point different from the one transmitted. For the simulation, by symmetry, the transmitter always sends \((1, 0)\). The receiver gets \((1 + v_1, v_2)\) and finds the value of \(i\) that minimizes \(\|(a_i, b_i) - (1 + v_1, v_2)\|^2\) where \(a_i = \cos(2\pi i/K)\) and \(b_i = \sin(2 \pi i/K)\) for \(i = 0, 1, \ldots, K-1\). The receiver does not make a mistake if the minimizer is \(i = 0\).

To compare the error rate of different values of \(k\), one has to note that the variance of the noise should be \(\sigma^2/k\). The reason is that the receiver computes the average value of the signal over the duration \(T\) of the transmission of the bit string. This duration increases in proportion to \(k\). Consequently, the average value of the noise looks like \((Z_1 + \cdots + Z_k)/k\) where the \(Z_j\) are the values of the noise during each bit transmission. If the variance of each \(Z_j\) is \(\sigma^2\), the variance of \((Z_1 + \cdots + Z_k)/k\) is \(\sigma^2/k\).

def dummy(sigmad,kd):

global sigma,k

sigma, k = float(sigmad), int(kd)

sigmad = widgets.Dropdown(options=['0.2', '0.4', '0.6', '0.8','1','1.2','1.4','1.6','1.8','2.0','2.2'],value='0.8',description='$\sigma$',disabled=False)

kd = widgets.Dropdown(options=['1', '2', '3', '4','5'],value='2',description='k',disabled=False)

z = widgets.interactive(dummy, sigmad = sigmad, kd = kd)

display(z)

def QPSK(sig,k):

N = 200

s,c = np.zeros([2,N+1])

K = int(2**k)

a1,a2 = np.zeros([2,K]) # x,y-coordinate of dots

e = np.arange(0.0,K) # errors

g = 2*np.pi/K

for i in range (0,K):

a1[i] = np.cos(i*g)

a2[i] = np.sin(i*g)

A = sig/(k**0.5)

for n in range(0,N):

for i in range(0,K):

e[i] = (1+np.random.normal(0,A) - a1[i])**2 + (np.random.normal(0,A) - a2[i])**2

y = np.argmin(e)

s[n+1] = ((n+1)*s[n] + 1 - (y == 0))/(n+2)

c[n+1] = 1 - (y == 0)

plt.tick_params(labelsize=16)

plt.plot(s, color='r',label="Fraction of errors" )

plt.scatter(range(201),0.1*c, color='b',label="0 = Correct, 0.1 = Error")

plt.legend(loc='center', shadow=True, fontsize=16)

plt.xlabel("$n$")

plt.title("Errors and Fraction of Errors for k = " + str(k) + " and $\sigma$ = " + str(sig), fontsize= 16)

QPSK(sigma,k)